Reality Studio

Client Project at The Devhouse Agency, 2021

Reality Studio is a set of two applications and a Unity Editor tool, all developed solely by me. Its goal is to let someone with no experience in traditional 3D software quickly create, change, and view interior design layouts in a high-fidelity AR experience.

Simulation Modeling

UTD ATEC Capstone Project, Fall 2020

A volume simulated with Position-Based Dynamics that can be interacted with in real time. Its mesh is generated and updated dynamically and can be exported to a .obj file. This was my senior capstone project at UTD. See the project page for an in-depth look at my development process.

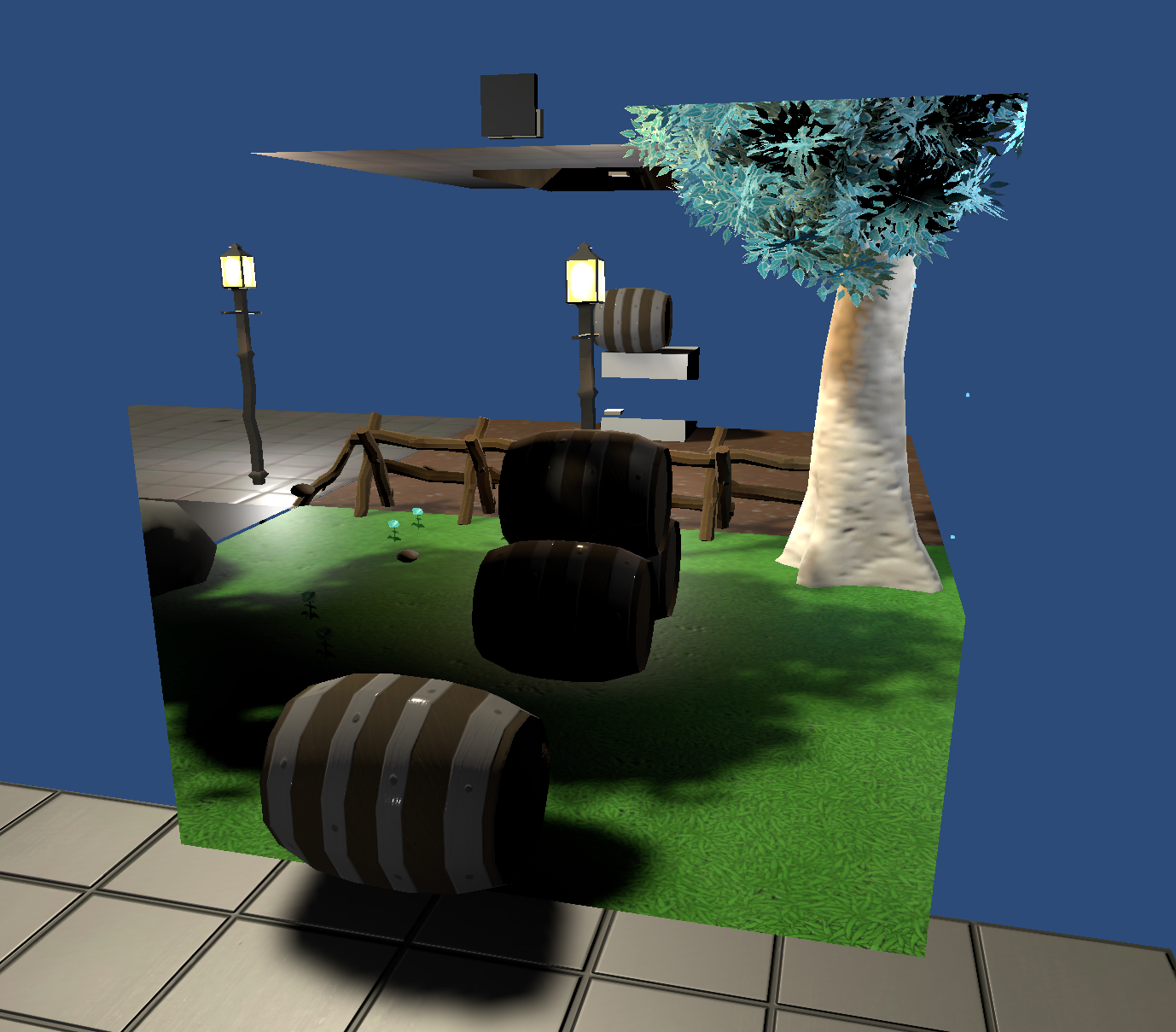
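
For readers unfamiliar with Position-Based Dynamics, here is a minimal 2D Python sketch of one simulation step. It is not the project's Unity code: the function name `pbd_step`, the use of distance constraints only, and all parameter values are assumptions made for illustration. The idea it shows is the usual PBD loop: predict positions from velocities, move the predicted positions until the constraints are satisfied, then derive the new velocities from the position change.

```python
import math

def pbd_step(positions, velocities, inv_masses, constraints, dt,
             gravity=(0.0, -9.81), iterations=10):
    """One Position-Based Dynamics step with distance constraints (illustrative).

    constraints is a list of (i, j, rest_length) tuples.
    An inverse mass of 0 pins a particle in place.
    """
    # 1. Predict new positions by integrating velocity (pinned particles skip gravity).
    predicted = []
    for (x, y), (vx, vy), w in zip(positions, velocities, inv_masses):
        if w > 0.0:
            vx += gravity[0] * dt
            vy += gravity[1] * dt
        predicted.append([x + vx * dt, y + vy * dt])

    # 2. Iteratively project the predicted positions onto the constraints.
    for _ in range(iterations):
        for i, j, rest in constraints:
            wi, wj = inv_masses[i], inv_masses[j]
            w_sum = wi + wj
            dx = predicted[j][0] - predicted[i][0]
            dy = predicted[j][1] - predicted[i][1]
            dist = math.hypot(dx, dy)
            if w_sum == 0.0 or dist == 0.0:
                continue
            # Share the correction between both particles, weighted by inverse mass.
            corr = (dist - rest) / (dist * w_sum)
            predicted[i][0] += wi * corr * dx
            predicted[i][1] += wi * corr * dy
            predicted[j][0] -= wj * corr * dx
            predicted[j][1] -= wj * corr * dy

    # 3. Derive velocities from how far each particle actually moved.
    new_velocities = [((px - x) / dt, (py - y) / dt)
                      for (px, py), (x, y) in zip(predicted, positions)]
    return [tuple(p) for p in predicted], new_velocities
```

A volumetric body would need more than distance constraints (for example, some form of volume preservation), which this sketch leaves out.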

Portals

Personal Project, 2019

I have created multiple versions of portals/mirrors in Unity, improving with each iteration:

- (Old) A visual-only portal/mirror that renders to a texture on a plane.
- (Current) A visual and functional portal that uses a screen-space shader.
- (WIP) A visual and functional portal that combines the ideas of the previous two in order to make it seamless and extremely efficient, potentially allowing for many recursions.

Wisps

UTD Virtual Environments Class, Spring 2019

A basic life simulation made in Unity that uses a Compute Shader to determine the behavior of each wisp.

Gravity

Personal Project, 2019

A simple gravity simulation with many particles using Unity’s Compute Shaders.

I am currently using the direct particle-particle method, where every particle is evaluated against every other particle, but I plan to try out a couple of approximation methods later.

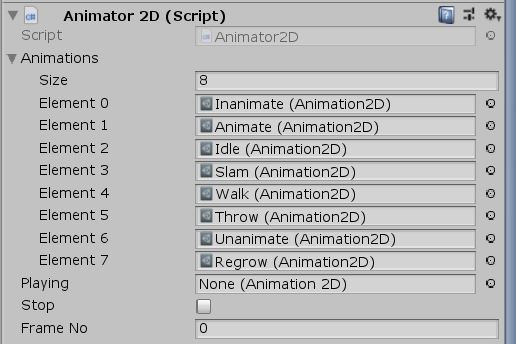
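
The particle-particle approach can be sketched on the CPU in a few lines of Python. This is an illustration of the technique rather than the project's compute shader: the function names, the softening term, and the semi-implicit Euler integrator are all assumptions. Each particle sums the pull of every other particle, so the cost grows with the square of the particle count, which is the motivation for approximation methods.

```python
import math

def accelerations(positions, masses, g=1.0, softening=0.01):
    """Direct-sum gravity: every particle against every other (O(n^2))."""
    acc = []
    for i, (xi, yi) in enumerate(positions):
        ax = ay = 0.0
        for j, (xj, yj) in enumerate(positions):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            # Softening (an assumption here) avoids huge forces at tiny distances.
            dist_sq = dx * dx + dy * dy + softening * softening
            scale = g * masses[j] / (dist_sq * math.sqrt(dist_sq))
            ax += dx * scale
            ay += dy * scale
        acc.append((ax, ay))
    return acc

def step(positions, velocities, masses, dt, g=1.0, softening=0.01):
    """Advance one time step: update velocities first, then positions."""
    acc = accelerations(positions, masses, g, softening)
    new_v = [(vx + ax * dt, vy + ay * dt)
             for (vx, vy), (ax, ay) in zip(velocities, acc)]
    new_p = [(x + vx * dt, y + vy * dt)
             for (x, y), (vx, vy) in zip(positions, new_v)]
    return new_p, new_v
```

On a GPU, the outer loop would typically map to one thread per particle while the inner loop stays a plain loop over all particles.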

Sprite Animation Controller

Personal Project, Fall 2018

A state-based animator for 2D Unity projects that uses sprite sheets.

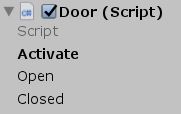

Logic Gate Puzzle System

Personal Project, 2018

A basic logic gate system for the puzzles in my WIP game “Geldamin’s Vault”.
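
As a rough idea of how a gate-based puzzle can be evaluated, here is a small Python sketch. It does not reflect the game's actual implementation: the `GATES` table, the `evaluate` function, and the lever and door names are hypothetical. Each gate reads the values of its named inputs and stores its own output under its name, so later gates can build on earlier ones.

```python
# Hypothetical gate table: gate name -> function over boolean inputs.
GATES = {
    "AND": lambda a, b: a and b,
    "OR": lambda a, b: a or b,
    "XOR": lambda a, b: a != b,
    "NOT": lambda a: not a,
}

def evaluate(circuit, inputs):
    """Evaluate gates in the order listed.

    circuit maps an output name to (gate_name, [source names]) and must list
    each gate after the gates it depends on.
    """
    values = dict(inputs)
    for name, (gate, sources) in circuit.items():
        values[name] = GATES[gate](*(values[s] for s in sources))
    return values

# Example puzzle: the door opens when lever A is on and lever B is off.
circuit = {
    "b_off": ("NOT", ["lever_b"]),
    "door": ("AND", ["lever_a", "b_off"]),
}
state = evaluate(circuit, {"lever_a": True, "lever_b": False})
```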